Introduction

In the Hadoop ecosystem, data lakes are typically built on the HDFS file system. However, most cloud providers have replaced it with their own deep storage systems, such as S3 or GCS. When using deep storage, choosing the right file format is crucial.

These file systems and deep storage systems are cheaper than databases, but they provide only basic storage and do not offer strong ACID guarantees.

You will need to choose the right storage for your use case based on your needs and budget. For example, you may use a database for ingestion if your budget permits and then, once the data is transformed, store it in your data lake for OLAP analysis. Or you may store everything in deep storage and keep only a small subset of hot data in a fast storage system such as a relational database.

File Formats

Note that deep storage systems store the data as files, and different file formats and compression algorithms provide benefits for certain use cases. How you store the data in your data lake is critical: you need to consider the format, the compression and, especially, how you partition your data.
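As a rough illustration of what partitioning can look like in practice, the sketch below builds Hive-style `key=value` directory paths from a record's date. The function name `partition_path`, the `events` prefix and the file name are hypothetical and chosen only for this example; it shows one common layout convention, not the only way to partition a data lake.

```python
from datetime import date
from pathlib import PurePosixPath


def partition_path(prefix: str, event_date: date, filename: str) -> str:
    """Build a Hive-style partitioned path: prefix/year=YYYY/month=MM/day=DD/filename."""
    return str(
        PurePosixPath(prefix)
        / f"year={event_date.year:04d}"
        / f"month={event_date.month:02d}"
        / f"day={event_date.day:02d}"
        / filename
    )


# Hypothetical prefix and file name, used only to show the resulting layout.
example = partition_path("events", date(2024, 1, 15), "part-0000.parquet")
print(example)
```

With a layout like this, an engine that understands the convention can usually skip whole directories when a query filters on the partition columns, which is why the choice of partition keys matters so much.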

The most common formats are CSV, JSON, Avro, Protocol Buffers, Parquet, and ORC.

Some things to consider when choosing the format are:

- The structure of your data: Some formats, such as JSON, Avro or Parquet, accept nested data and others do not. Even the ones that do may not be highly optimized for it. Avro is the most efficient format for nested data; I recommend not using Parquet nested types because they are very inefficient. Processing nested JSON is also very CPU intensive. In general, it is recommended to flatten the data when ingesting it.

- Performance: Some formats, such as Avro and Parquet, perform better than others, such as JSON. Even between Avro and Parquet, one will be better than the other depending on the use case. For example, since Parquet is a column-based format, it is great for querying your data lake using SQL, whereas Avro is better for row-level ETL transformations.

- Easy to read: Consider whether people need to read the data. JSON and CSV are text formats and are human readable, whereas more performant formats such as Parquet or Avro are binary.

- Compression: Some formats offer higher compression rates than others.

- Schema evolution: Adding or removing fields is far more complicated in a data lake than in a database. Some formats, like Avro or Parquet, provide some degree of schema evolution, which allows you to change the data schema and still query the data. Tools such as the Delta Lake format provide even better support for dealing with schema changes.

- Compatibility: JSON and CSV are widely adopted and compatible with almost any tool, while more performant options have fewer integration points.
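To make the advice about flattening nested data at ingestion more concrete, here is a minimal sketch that turns a nested JSON-like record into a single-level one. The `flatten` helper, the `_` separator and the sample record are assumptions made for this example; arrays are deliberately left untouched, and a real pipeline would need to decide how to handle them.

```python
from typing import Any, Dict


def flatten(record: Dict[str, Any], parent_key: str = "", sep: str = "_") -> Dict[str, Any]:
    """Recursively flatten nested dicts into one level, joining keys with `sep`."""
    flat: Dict[str, Any] = {}
    for key, value in record.items():
        full_key = f"{parent_key}{sep}{key}" if parent_key else key
        if isinstance(value, dict):
            # Descend into nested objects and merge their flattened keys.
            flat.update(flatten(value, full_key, sep))
        else:
            # Scalars and lists are kept as-is; array handling is out of scope here.
            flat[full_key] = value
    return flat


# Hypothetical nested record, as it might arrive from an API.
nested = {"id": 1, "user": {"name": "Ana", "address": {"city": "Lisbon", "zip": "1000"}}}
flat_record = flatten(nested)
print(flat_record)
```

The flattened record maps directly onto flat formats such as CSV and onto simple columnar schemas, at the cost of longer column names.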

File Formats

- CSV: Good option for compatibility, spreadsheet processing and human-readable data. The data must be flat; CSV is not efficient and cannot handle nested data. Problems with the separator can lead to data quality issues. Use this format for exploratory analysis, POCs or small data sets.

- JSON: Heavily used in APIs. Nested format. It is widely adopted and human readable, but it can be difficult to read if there are lots of nested fields. Great for small data sets, landing data or API integration. If possible, convert to a more efficient format before processing large amounts of data.

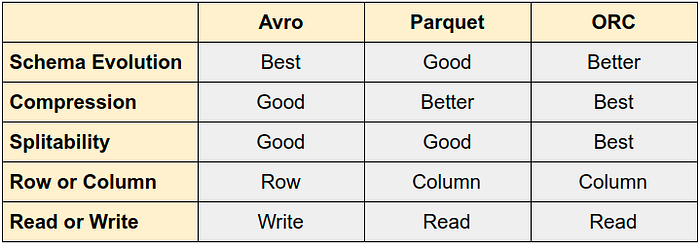

- Avro: Great for storing row data, very efficient. It has a schema and supports schema evolution. Great integration with Kafka. Supports file splitting. Use it for row-level operations or in Kafka. Great for writing data, slower to read.

- Protocol Buffers: Great for APIs, especially for gRPC. It supports schemas and is very fast. Use it for APIs or machine learning.

- Parquet: Columnar storage. It has schema support. It works very well with Hive and Spark as a way to store columnar data in deep storage that is queried using SQL. Because it stores data in columns, query engines read only the columns selected by the query rather than every field of every record, as they would with a row format such as Avro. Use it as a reporting layer.

- ORC: Similar to Parquet, but it offers better compression. It also provides better schema evolution support, although it is less popular.
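The difference between row formats such as Avro and columnar formats such as Parquet or ORC can be sketched with plain Python structures. This is only a toy model of the two layouts, not how either format is implemented on disk; the sample rows and the `to_columns` helper are made up for the illustration.

```python
from typing import Any, Dict, List

# Row layout: each record keeps all of its fields together.
rows: List[Dict[str, Any]] = [
    {"id": 1, "country": "ES", "amount": 10.0},
    {"id": 2, "country": "PT", "amount": 25.5},
    {"id": 3, "country": "ES", "amount": 7.25},
]


def to_columns(records: List[Dict[str, Any]]) -> Dict[str, List[Any]]:
    """Pivot row dicts into a dict of column lists (assumes all rows share the same keys)."""
    columns: Dict[str, List[Any]] = {key: [] for key in records[0]}
    for record in records:
        for key, value in record.items():
            columns[key].append(value)
    return columns


columns = to_columns(rows)

# Row layout: every full record is visited even though only "amount" is needed.
total_from_rows = sum(record["amount"] for record in rows)

# Column layout: only the "amount" column is touched; "id" and "country" are never read.
total_from_columns = sum(columns["amount"])

print(total_from_rows, total_from_columns)
```

An aggregate over one column touches far less data in the columnar layout, while appending or transforming a whole record is more natural in the row layout, which mirrors the reporting versus ETL split described above.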

File Compression

Lastly, you also need to consider how to compress the data, weighing the trade-off between file size and CPU cost. Some compression algorithms are faster but produce bigger files; others are slower but achieve better compression rates. For more details check this article.

I recommend using Snappy for streaming data since it does not require too much CPU power. For batch processing, bzip2 is a great option.
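The size versus CPU trade-off is easy to measure on your own data. The sketch below is a minimal example that assumes synthetic JSON lines as input and uses only codecs from the Python standard library; Snappy is not part of the standard library, so gzip at its fastest level stands in here for a "fast but larger" codec. The `measure` helper and the sample records are hypothetical, and the sizes and timings will vary with your data and machine.

```python
import bz2
import gzip
import json
import time

# Synthetic, repetitive JSON lines standing in for real data.
records = [{"id": i, "country": "ES" if i % 2 else "PT", "amount": i * 1.5} for i in range(20000)]
raw = "\n".join(json.dumps(r) for r in records).encode("utf-8")


def measure(name, compress):
    """Return (name, compressed size in bytes, elapsed seconds) for one codec."""
    start = time.perf_counter()
    compressed = compress(raw)
    elapsed = time.perf_counter() - start
    return name, len(compressed), elapsed


results = [
    measure("gzip level 1", lambda data: gzip.compress(data, compresslevel=1)),
    measure("gzip level 9", lambda data: gzip.compress(data, compresslevel=9)),
    measure("bzip2 level 9", lambda data: bz2.compress(data, compresslevel=9)),
]

print(f"raw: {len(raw)} bytes")
for name, size, elapsed in results:
    print(f"{name}: {size} bytes, {elapsed:.3f}s")
```

Running a comparison like this on a representative sample is usually more informative than general rules, since compression ratios depend heavily on how repetitive the data is.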

Conclusion

As we can see, CSV and JSON are common, human-readable formats that are easy to use, but they lack many of the capabilities of other formats, which makes them too slow for querying the data lake. ORC and Parquet are widely used in the Hadoop ecosystem to query data, whereas Avro is also used outside of Hadoop, especially together with Kafka for ingestion; it is very good for row-level ETL processing. Row-oriented formats have better schema evolution capabilities than column-oriented formats, making them a great option for data ingestion.
